What about AI Agents for Quants?

Jun 29, 2025

I am astonished by how technology evolves, creating new things with an extra layer of abstraction. A couple of years ago, when I was a professional software developer, I performed many tedious tasks that can now be carried out flawlessly with the help of a LLM assistant, such as Github Copilot, Cursor. There are many more than these two, including GPT 4.1 and Gemini. The evolution of these assistants enables anyone involved in coding to enhance their performance. This is not only because tedious tasks can be delegated to these assistants, who work more quickly, but also because they make better use of our most limited resource: time. I’m currently making use of one of them.

What’s going on?

As someone interested in applying AI to finance, I’ve been tracking the evolution of LLM models that are then used for quantitative finance. The creativity of many quants and non-quants is simply impressive. As a quantitative analyst, I could not be more excited to test the scope of these applications. To this end, I have been preparing a set of experiments for a while to see how I can leverage my own models using LLM. However, many practitioners using these tools are aware that the information used to generate LLM outputs is not necessarily up to date. The programming language your models are written in is not that important; however, this time I used Python since it is the easiest language for prototyping purposes.

LLM models are a very wide topic, that I hope to talk about soon in another post, but just to have a glance I really recommend these books to get into the topic at some degree.

- Large Language Model-Based Solutions written by Shreyas Subramanian.

- Hands-On Large Language Models written by Jay Alammar and Maarten Grootendorst.

- Large Language Models Projects written by Pere Martra.

- Prompt Engineering for LLMs written by John Berryman and Albert Ziegler.

IDEs for testing

Github Copilot

For a while I’ve been using VS Code as IDE by preference for models development. It is exposed to a diversified number of extensions that leverage the development using different programming languages in the same IDE. Among other attributes, my favorite one is Github Copilot. This is an AI-powered coding assistant that may use of different LLM models like GPT 4.1 or Gemini 2.5 for development. The usage is quite intuitive after a serie of iterations, whereas IDE has received input enough to learn from context. Microsoft has improved the user experience last year as well, an appreciated gesture. Different modes are available as shown in this video. I think, the usage is almost self-explanatory once you watch the video, but I’d like to emphasize the Agent mode.

I’ve asked Github Copilot whether it uses RAG models, and output is self-explanatory, below.

GitHub Copilot does use some RAG-like techniques, but it's not a pure RAG system. Here's how it works:

🟢 **What GitHub Copilot uses:**

- Code context retrieval: Analyzes your current file, open tabs, and workspace to understand context

- Semantic search: Searches your codebase for relevant patterns and examples

- Real-time context: Incorporates your recent edits, cursor position, and file structure

- Repository-aware suggestions: Understands your project's coding patterns and dependencies

🟡 **However, it's not traditional RAG because:**

- It doesn't maintain a separate vector database of external documents

- It doesn't retrieve information from external knowledge bases in real-time

- The "retrieval" is more about code context rather than document retrieval

🟣 **The hybrid approach: GitHub Copilot combines:**

- Pre-trained knowledge from its training on public code repositories

- Context-aware retrieval from your current workspace

- LLM generation to produce relevant code suggestions

So while it uses retrieval-augmented techniques, it's more accurate to say it uses "context-aware generation" rather than pure RAG. The retrieval component is focused on your immediate coding context rather than external knowledge sources.

---

For true RAG in coding, you'd typically see systems that:

- Maintain vector databases of documentation

- Retrieve relevant docs/examples from external sources

- Combine retrieved information with LLM generation

**GitHub Copilot is more of a context-aware code completion tool that leverages both pre-trained knowledge and real-time code analysis.**

Why Github Copilot and not Claude, for instance? Well, this excercise is performed in Linux, and Claude doesn’t have package to download and install in Linux.

Models Context

Agentic AI

Agentic AI has gained popularity and it refers to AI systems designed to act autonomously with long tasks that adaptively to plan and execute sub-tasks without constant human input. Let’s put it in simple words, it likes divide and conquer. Unlike traditional AI models that respond to prompts, agentic AI operates more like software agents that can take initiative, make decisions for planing the string of sub-tasks, and revise the strategies based on feedback. These systems combine LLMs with tools, and this is my favorite part, that can be customized to not depending of last updated vector store databases (see RAG-driven generative AI by Denis Rothman, Generative AI with LangChain by Ben Auffarth, and/or AI Engineering by Chip Huyen).

The key difference between agentic AI and generic/standard AI lies in autonomy and goal-directed behavior. Whereas regular AI (like ChatGPT) excels at solving single problems or providing task-specific outputs, it lacks persistence, self-direction, and memory. Conversely, Agentic AI behaves more like a digital assistant by setting subgoals, recall past context, learn from outcomes, and even interact with humans over time. Consequently, agentic AI systems represent a major shift from AI tools that wait for instructions to systems that actively work alongside or on behalf of humans in dynamic, real world tasks.

Why RAG is important?

Well, LLM models themselve are exposed to drawbacks such as hallucination, out to date information, and the most relevant for quants IMO, unability for accessing private/dynamic information. In spite of these shortcomings, LLM models have been a fantastically complemented by RAG (Retreival-Augmented Generation) models. As Denis Rothman describes, in RAG-driven generative AI book - chapter 1, RAG model process is compraised by two stages aimed to improve accuracy of LLM responses. The first stage is called retrieval that consists on collecting relevant resources associated with the topic in context. Subsequently, the stage coined generation depicts the action of using relevant sources just collected to produce the output via LLM models. An interesting coding example of how to parse PDF docs for RAG here.

Why is then Agent Mode in GH Copilot interesting for Quants?

Intuition tells me that the answer to this question may be controversial, if not quite controversial. Let me explore from a personal point of view. One of our tasks as quants is being able to quantitatively validate, model and develop tools that can be used for different financial processes. Portfolio management, algorithmic trading, risk management, so on and so forth are included. Across this processes, we manage to optimize our resources in such a way we can dedicate the most of our time to the main goal, that is to analyse/produce models that yield an added value to portfolios in the company. Just in case, I’m not refering to regulatory aspects, but given the standarized structure in many cases, it could be included. Thereby, tools that reduce the operative aspect to do so gain terrain in our toolkit. So, why could LLM models be in our toolkit?. Straightforward, to reduce our human effort on doing manual stuff. I’ve not said anything new, since this might be quite related to DevOps in software engineering.

MCP Servers and Their Relevance for Agentic AI and RAG

MCP (Model Context Protocol) servers are specialized endpoints that enable large language models (LLMs) and agentic AI systems to interact with external tools, data sources, or computational resources in a standardized way. In the context of agentic AI and RAG, MCP servers provide a protocol for LLMs to send structured requests (such as data queries, analytics tasks, or model inference) and receive results in a predictable format.

This protocol-driven approach allows agentic systems to dynamically extend their capabilities by leveraging external services for tasks like financial calculations, data retrieval, or portfolio analytics—without embedding all logic within the LLM itself. For RAG workflows, MCP servers can act as retrieval endpoints, supplying up-to-date or proprietary information that enhance the relevance and accuracy of generated responses. In quantitative finance, using MCP servers ensures secure, auditable, and modular integration of advanced analytics into agentic or LLM-powered solutions.

Let’s get to Work!

Now that reader has the context of this post, let’s get to work!. The goal is creating a MCP server with tools that allows an user to calculate Historical Value at Risk (HVaR) based on a CSV file with specific structure and containing historical prices. For this example we’ll take as input data the file found here. It contains daily historical RGTI (Rigetti) stock prices.

Setup

The set of configurations are pretty simple. For the sake of this example, I’ve created a very small library that I can reuse in both MCP server and a jupyter notebook aimed to check the answer generated by LLM model using MCP server tools. Library is available here, and located in my_var folder, wheras server is the code in server.py file.

my-var library

Var Module

This module is responsible for HVaR and HCVaR calculations, together with return calculations. Thereby, var.py file contains two functions, one designed to calculate the returns given an array of prices and second onedesigned to calculate historical VaR and CVaR receiving the array of returns as shown below:

import numpy as np

import pandas as pd

def calculate_returns(prices:list[float]):

"""

Calculate simple returns from a sequence of prices.

"""

print(f'We are in calculate_returns function, receiving {len(prices)} data points.')

prices = np.asarray(prices).flatten()

if len(prices) < 2:

raise ValueError("At least two prices are required to calculate returns.")

returns = (prices[1:] - prices[:-1]) / prices[:-1]

return returns.tolist()

def calculate_var_cvar_historical(returns:list[float], significance_level:float=0.05):

"""

Calculate historical VaR and CVaR for a given returns series or array.

"""

try:

if not isinstance(significance_level, float):

significance_level = float(significance_level)

# print("Step 1: Converting returns to numpy array and flattening.")

returns = np.asarray(returns).flatten()

# print(returns)

# print(f"Step 2: Filtering NaNs. Input length: {len(returns)}")

returns = returns[~np.isnan(returns)]

# print(f"Step 3: After filtering NaNs. Length: {len(returns)}")

if len(returns) == 0:

raise ValueError("Returns array is empty.")

# print("Step 4: Sorting returns ascending.")

sorted_returns = np.sort(returns)

n = len(sorted_returns)

# print(f"Step 5: Number of sorted returns: {n}")

var_index = int(np.floor(significance_level * n))

var_index = max(0, min(var_index, n - 1))

# print(f"Step 6: Calculated var_index: {var_index}")

var_historical = sorted_returns[var_index]

# print(f"Step 7: VaR (historical): {var_historical}")

cvar_historical = sorted_returns[:var_index + 1].mean()

# print(f"Step 8: CVaR (historical): {cvar_historical}")

results = {

'significance_level': (1 - significance_level) * 100,

'n_points': n,

'var_historical': var_historical,

'cvar_historical': cvar_historical,

}

# print("Step 9: Returning results.")

return results

except Exception as e:

print(f"Exception occurred: {e}")

raise

Utils Module

On the other hand, the module utils.py contains one function created to read the content in CSV file, as follows. A limitation using this code is that the file must be located in the same device where server is running, and have read permissions granted. Any other setup making the server remote, will require adjustments on this codes to receive file’s content.

import pandas as pd

def extract_column_from_csv(csv_path: str, column: str, days: int, round:int=4) -> list[float]:

"""

Extract the last N values from a specified column in a CSV file containing historical prices.

Parameters:

csv_path: str, absolute path to the CSV file

column: str, column name to extract values from

days: int, number of last days to extract

Returns:

list: last N values from the specified column

"""

# Try to automatically detect decimal separator

df = pd.read_csv(csv_path, sep=',', decimal='.')

if column not in df.columns:

raise ValueError(f"Column '{column}' not found in CSV file.")

values = df[column].dropna().round(round).values

if len(values) < days:

raise ValueError(f"CSV contains only {len(values)} rows, but {days} requested.")

return values[-days:].tolist()

These two modules will allow the server to interact with GPT 4.1 which is the LLM model selected for this test since it has shown a good performance in coding tasks. I did compare the performance respect to Claude Sonnet 4 in Agent mode, and the main observed difference is response time as well as verbosity, where former displays more verbose than GPT 4.1.

MCP Server

MCP server is built using mcp library by means Model Context Protocol - Python SDK. Code below.

# server.py

from mcp.server.fastmcp import FastMCP

import math

from typing import Optional

import yfinance as yf

from my_var.var import calculate_returns, calculate_var_cvar_historical

from my_var.utils import extract_column_from_csv

import warnings

import pandas as pd

warnings.filterwarnings('ignore')

# Create an MCP server

mcp = FastMCP("Quant-MCP-server")

@mcp.tool()

def extract_column_from_csv_mcp(csv_path: str, column: str, days: int, round:int=4) -> list[float]:

"""

Extract the last N values from a specified column in a CSV file containing historical prices.

Parameters:

csv_path: str, absolute path to the CSV file

column: str, column name to extract values from

days: int, number of last days to extract

Returns:

list: last N values from the specified column

input example:

{

"csv_path": "/path/to/prices.csv",

"column": "Close",

"days": 30,

"round": 4

}

output example:

[3, 1.02, 1.05, 1.05, 1.02, 1.03, 1.05, 1.02, 1.02, 0.9864, 1.04, 0.98, 0.88, 0.9531, 0.9644, 0.8967, 0.9061, 1.05, 1.07, 0.9987, 0.9491, 0.96, 0.992, 1.04, 1.04, 1.08, 1.02, 1.08, 1.19, 1.28, 1.17, 1.09, 1.04, 1.05, 1.12, 1.02, 1.04, 1.07, 1.03, 0.9904, 1.03, 0.948, 0.8972, 0.7961, 0.8462, 0.7778, 0.8305, 0.7902, 0.8537, 0.8966, 0.8409, 0.8856, 0.8982, 0.87, 0.836, 0.9275, 0.9068, 0.9659, 0.9459, 0.91, 0.8599, 0.8807, 0.9164, 0.8312, 0.835, 0.8, 0.7536, 0.6881, 0.7336, 0.8044, 0.8202, 0.8526, 0.8216, 0.8404, 0.82, 0.8151, 0.7939, 0.765, 0.76, 0.7402, 0.7781, 0.8102, 0.7831, 0.753, 0.7644, 0.748, 0.7849, 0.7847, 0.7789, 0.7659, 0.7555, 0.8164, 0.8422, 0.8105, 0.92, 0.951, 1.11, 1.28, 1.23, 1.21, 1.2, 1.22, 1.5, 1.46, 1.41, 1.32, 1.23, 1.13, 1.12, 1.2, 1.43, 1.51, 1.52, 1.49, 1.7, 1.55, 1.41, 1.3, 1.31, 1.35, 1.48, 1.74, 2.75, 2.2, 2.4, 3.05, 3.02, 3.14, 3.11, 3.18, 4.38, 4.47, 6.49, 7.38, 5.97, 7.16, 8.43, 11.13, 10.69, 7.47, 9.37, 10.96, 11.35, 15.44, 17.08, 17.0, 15.26, 20.0, 19.02, 19.51, 18.39, 10.04, 8.93, 6.05, 8.95, 10.94, 11.24, 9.83, 13.98, 13.91, 13.47, 13.2, 12.45, 13.08, 12.66, 12.3, 13.17, 13.47, 13.72, 13.83, 13.29, 12.85, 12.35, 11.02, 11.75, 12.25, 11.81, 10.52, 11.03, 11.47, 10.75, 10.12, 9.03, 9.28, 8.03, 8.46, 7.7, 7.86, 8.18, 8.51, 9.35, 7.91, 8.05, 8.95, 8.75, 11.22, 11.16, 10.26, 9.905, 8.99, 9.07, 9.78, 9.82, 9.18, 8.47, 8.15, 7.92, 7.81, 8.49, 8.15, 7.5, 8.33, 7.72, 9.39, 9.42, 9.1, 8.86, 8.62, 8.25, 8.32, 8.11, 8.57, 9.11, 9.3, 9.37, 9.22, 8.86, 8.87, 9.14, 10.63, 9.695, 9.785, 9.25, 10.31, 10.58, 11.55, 9.865, 11.64, 11.54, 11.85, 12.05, 11.92, 10.96, 13.86, 14.02, 14.19, 14.16, 13.15, 12.11, 12.26, 12.04, 11.82, 11.3186]

"""

# Try to automatically detect decimal separator

list_prices = extract_column_from_csv(csv_path=csv_path, column=column,days=days, round=round)

print(list_prices)

return list_prices

@mcp.tool()

def calculate_returns_mcp(prices:list[float]):

"""

Calculate simple returns from a sequence of p

return calculate_var_cvar_historical(returns=returns, significance_level=significance_level)rices.

Parameters:

prices: array-like, sequence of prices

Returns:

list: returns as a list of floats

input example:

{

"prices": [21.14,21.15,21.15,21.16,21.18,21.17,21.18,21.16,21.09,21.13,21.13,21.15,21.13,21.02,21.02,21.01,21.03,21.04,21.03,21.04,21.05,21.08,21.07,21.1,21.11,21.14,21.15,21.15,21.16,21.16,21.16,21.17,21.04,21.02,21.0,20.96,20.98,20.99,20.97,21.0,20.98,20.89,20.69,20.87,20.87,20.97,20.96,20.97,20.98,20.98,21.03,21.05,20.89,20.89,20.91,20.89,20.93,20.94,20.95,21.01,21.04,21.07,21.02,21.01,21.03]

}

"""

return calculate_returns(prices=prices)

# Add an addition tool

@mcp.tool()

def calculate_var_cvar_historical_mcp(returns:list[float], significance_level:float=0.05):

"""

Calculate historical VaR and CVaR for a given returns series or array.

Parameters:

returns: array-like, series of returns

significance_level: float, confidence level (0.05 for 95% VaR/CVaR)

Returns:

dict: VaR and CVaR calculations

input example:

{

"returns": [1.14,2.1,2.5,2.1,..],

"significance_level": 0.05

}

"""

return calculate_var_cvar_historical(returns=returns, significance_level=significance_level)

@mcp.resource("yfinance-data://{ticker}/{period}")

def download_yfinance_data(ticker: str, period: str = '2y') -> dict:

"""

Download historical data from Yahoo Finance for a given ticker and period.

Parameters:

ticker: str, stock ticker symbol

period: str, data period ('1y', '2y', '5y', etc.)

Returns:

dict: Historical price data as a dictionary

"""

stock = yf.Ticker(ticker)

data = stock.history(period=period)

if data.empty:

raise ValueError(f"No data found for ticker {ticker} and period {period}")

return data.reset_index().to_dict(orient='list')

def main():

"""Main function to run the MCP server"""

mcp.run(transport='sse')

if __name__ == "__main__":

main()

Likely you’ll be impressed, as I was, when you realize that for running it you only need to execute the common python command as follows.

> python server.py

Github Copilot - Agent Mode

Adding the server in Agent mode, is also straightforward. Instructions can be found in VS Code webpage. Nonetheless, I want to make only two comments respect official documentation.

- As observed in

main()function ofserver.pyfile, I’m passing the parameter transport=”sse”. This is one of the three modes that MCP server has to communicate with MCP client (If there is a server there is always a client). So, The setup inmcp.jsonfile, that is created when selected the option Workspace Setings when adding a server ought to look like

{

"servers": {

"quant-mcp-server": {

"type": "sse",

"url": "http://127.0.0.1:8000/sse"

}

}

}

The point is that the endpoint of the added server must be /sse, otherwise you cannot connect to MCP server from Agent mode with this setup.

- When testing the server, and you disconnect it when Agent mode is using the server, it is useful to switch to

editoraskmode to let Github Copilot attempt to refresh the description of tools in MCP server.

Execution

As I said the goal is to calculate HVaR and SVaR given the input from CSV file. On this regard, the designed prompt I used to test the server via Agent mode in Github Copilot is shown below.

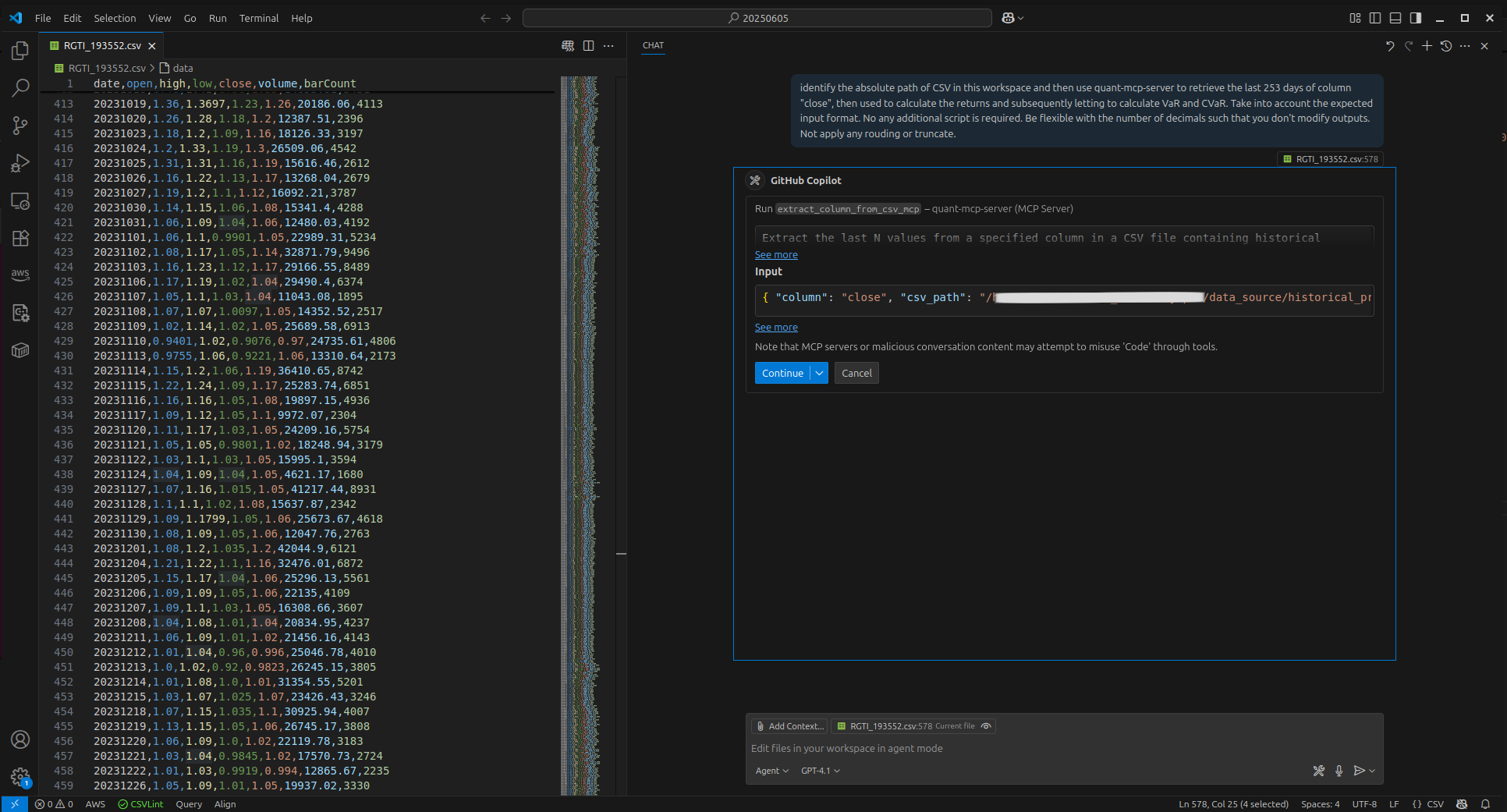

identify the absolute path of CSV in this workspace and then use quant-mcp-server to retrieve the last 253 days of column “close”, then used to calculate the returns and subsequently letting to calculate VaR and CVaR. Take into account the expected input format. No any additional script is required. Be flexible with the number of decimals such that you don’t modify outputs. Not apply any rouding or truncate.

Testing codes in Github here. Stages are described as follow.

Reading CSV file - first step

As displayed in utils.py file above, there is a function called extract_column_from_csv that receives the path to the csv file, the column name and the number of observations to be extracted. It assumes the file content depicts historical prices sorted ascending. By calling the tool extract_column_from_csv_mcp in server.py, the fucntion in utils.py is called. As result, the array with prices is collected by GPT 4.1 then passed by Github Copilot agent to next task designed by it to accomplish the goal. Image below shows the output of this first step. To let the agent continue with next task the user must press the button Continue.

Input below:

{

"column": "close",

"csv_path": "/..<your absolute path to csv file>../data_source/historical_prices/1_day_5_Y/20250605/RGTI_193552.csv",

"days": 253

}

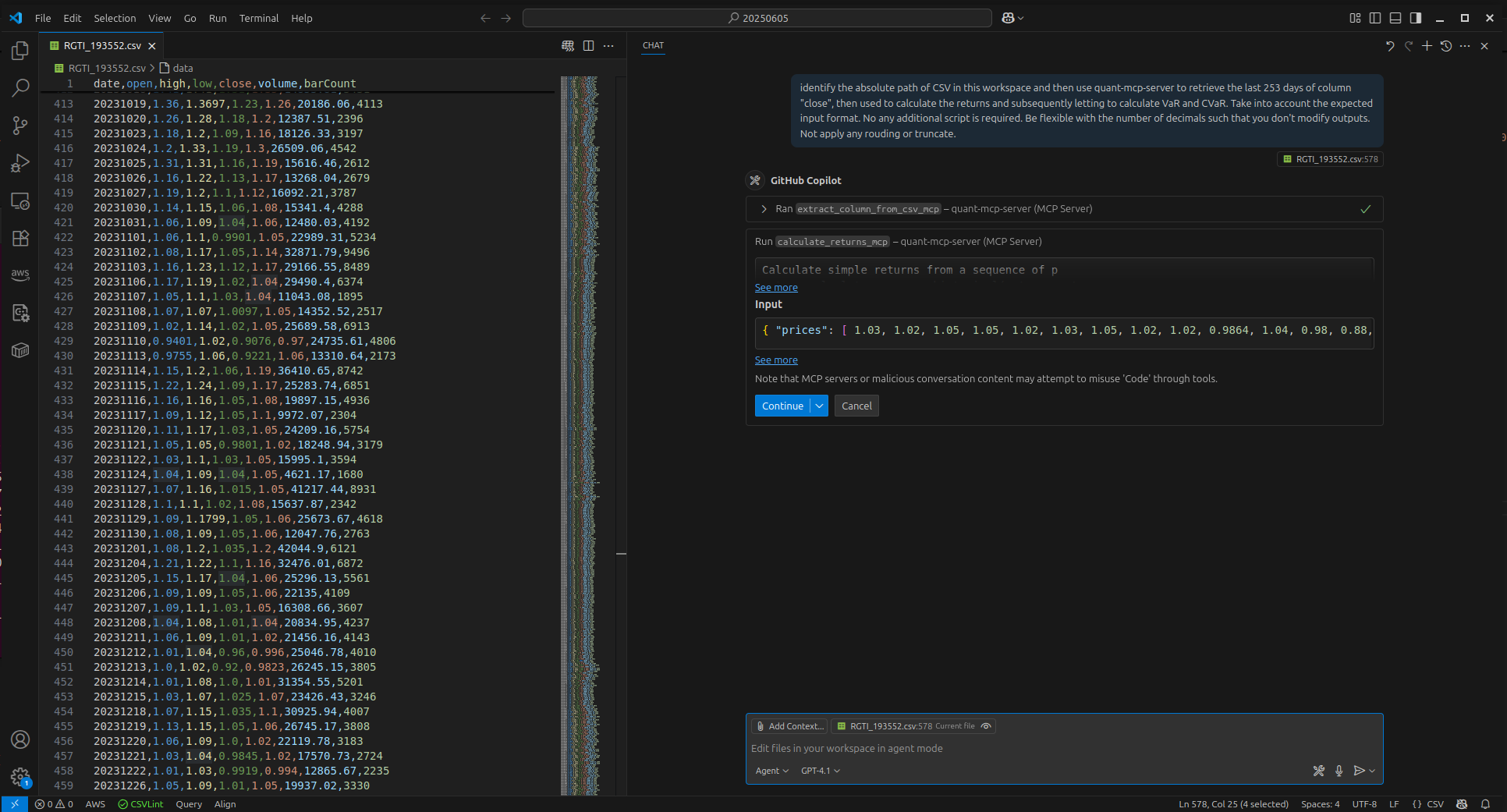

Calculating Returns - Second step

As part of the common flow to calculate Historical VaR, the returns need to be calculated. Subsequently, GH Copilot agent calls MCP tool calculate_returns_mcp. This tool internally calls the function my_var.var.calculate_var_cvar_historical receiving as parameter the list of prices, obtained from call MCP tool extract_column_from_csv_mcp. Image below shows the input to be sent to the second task, passing as parameter the json with prices. Click to button Continue is expected to proceed.

Input below: s

{

"prices": [

1.03, 1.02, 1.05, 1.05, 1.02, 1.03, 1.05, 1.02, 1.02, 0.9864, 1.04, 0.98, 0.88, 0.9531, 0.9644,

0.8967, 0.9061, 1.05, 1.07, 0.9987, 0.9491, 0.96, 0.992, 1.04, 1.04, 1.08, 1.02, 1.08, 1.19, 1.28,

1.17, 1.09, 1.04, 1.05, 1.12, 1.02, 1.04, 1.07, 1.03, 0.9904, 1.03, 0.948, 0.8972, 0.7961, 0.8462,

0.7778, 0.8305, 0.7902, 0.8537, 0.8966, 0.8409, 0.8856, 0.8982, 0.87, 0.836, 0.9275, 0.9068, 0.9659,

0.9459, 0.91, 0.8599, 0.8807, 0.9164, 0.8312, 0.835, 0.8, 0.7536, 0.6881, 0.7336, 0.8044, 0.8202,

0.8526, 0.8216, 0.8404, 0.82, 0.8151, 0.7939, 0.765, 0.76, 0.7402, 0.7781, 0.8102, 0.7831, 0.753,

0.7644, 0.748, 0.7849, 0.7847, 0.7789, 0.7659, 0.7555, 0.8164, 0.8422, 0.8105, 0.92, 0.951, 1.11,

1.28, 1.23, 1.21, 1.2, 1.22, 1.5, 1.46, 1.41, 1.32, 1.23, 1.13, 1.12, 1.2, 1.43, 1.51, 1.52, 1.49,

1.7, 1.55, 1.41, 1.3, 1.31, 1.35, 1.48, 1.74, 2.75, 2.2, 2.4, 3.05, 3.02, 3.14, 3.11, 3.18, 4.38,

4.47, 6.49, 7.38, 5.97, 7.16, 8.43, 11.13, 10.69, 7.47, 9.37, 10.96, 11.35, 15.44, 17.08, 17, 15.26,

20, 19.02, 19.51, 18.39, 10.04, 8.93, 6.05, 8.95, 10.94, 11.24, 9.83, 13.98, 13.91, 13.47, 13.2,

12.45, 13.08, 12.66, 12.3, 13.17, 13.47, 13.72, 13.83, 13.29, 12.85, 12.35, 11.02, 11.75, 12.25,

11.81, 10.52, 11.03, 11.47, 10.75, 10.12, 9.03, 9.28, 8.03, 8.46, 7.7, 7.86, 8.18, 8.51, 9.35, 7.91,

8.05, 8.95, 8.75, 11.22, 11.16, 10.26, 9.905, 8.99, 9.07, 9.78, 9.82, 9.18, 8.47, 8.15, 7.92, 7.81,

8.49, 8.15, 7.5, 8.33, 7.72, 9.39, 9.42, 9.1, 8.86, 8.62, 8.25, 8.32, 8.11, 8.57, 9.11, 9.3, 9.37,

9.22, 8.86, 8.87, 9.14, 10.63, 9.695, 9.785, 9.25, 10.31, 10.58, 11.55, 9.865, 11.64, 11.54, 11.85,

12.05, 11.92, 10.96, 13.86, 14.02, 14.19, 14.16, 13.15, 12.11, 12.26, 12.04, 11.82, 11.3186

]

}

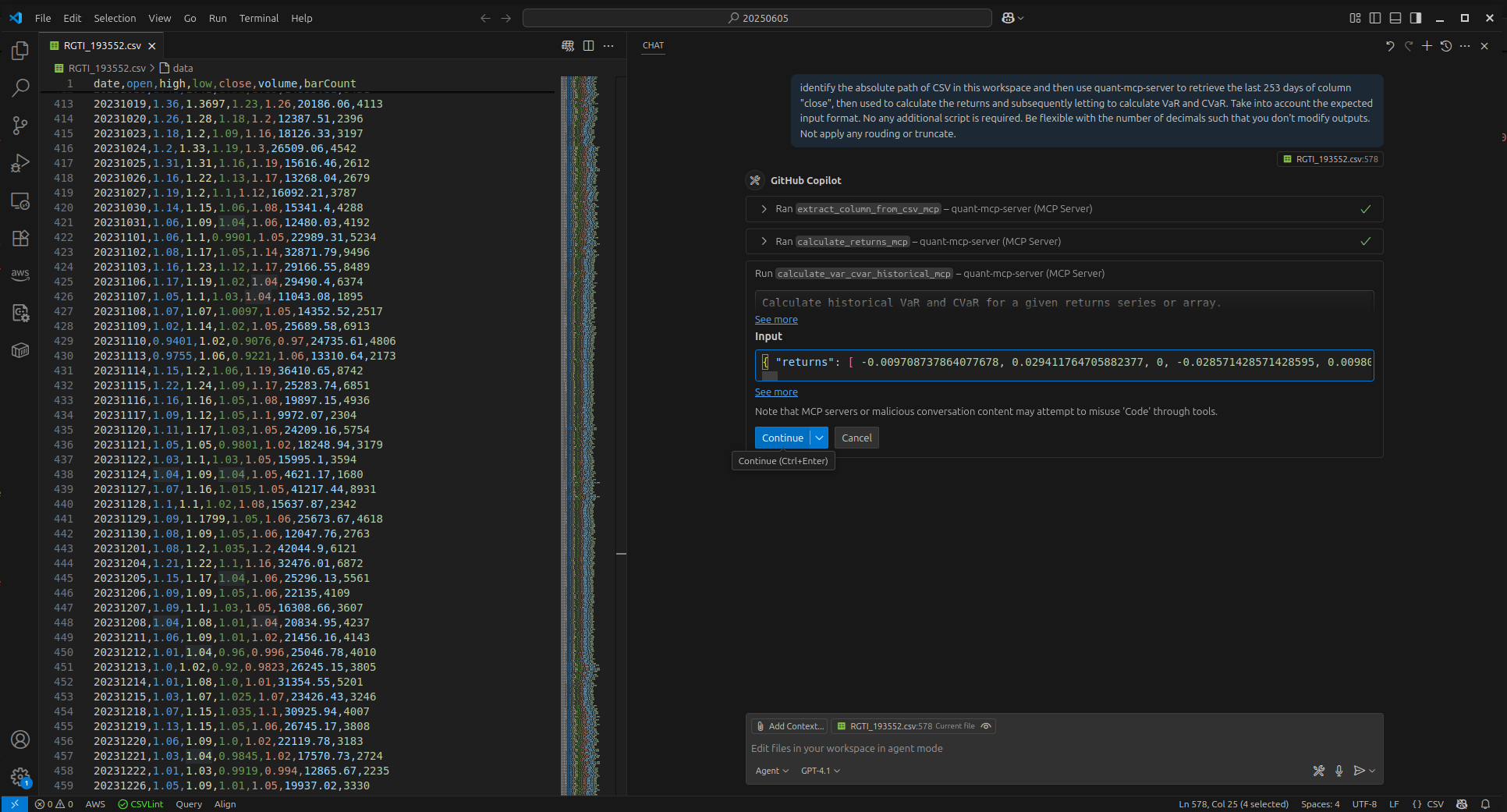

Calculating Returns - Third step

The last step is calling the MCP tool named calculate_var_cvar_historical_mcp receiving as parameter the returns obtained in second step, together with significance level.

Input below:

{

"returns": [

-0.009708737864077678, 0.029411764705882377, 0, -0.028571428571428595, 0.00980392156862746,

0.019417475728155355, -0.028571428571428595, 0, -0.032941176470588196, 0.05433901054339008,

-0.057692307692307744, -0.10204081632653059, 0.08306818181818175, 0.011856048683244243, -0.07019908751555369,

0.010482881677261028, 0.1588124931023066, 0.019047619047619063, -0.06663551401869161, -0.049664563933113026,

0.011484564324096417, 0.03333333333333337, 0.04838709677419359, 0, 0.03846153846153849,

-0.0555555555555556, 0.058823529411764754, 0.10185185185185174, 0.0756302521008404, -0.08593750000000007,

-0.06837606837606826, -0.04587155963302756, 0.009615384615384623, 0.06666666666666672, -0.08928571428571436,

0.01960784313725492, 0.028846153846153872, -0.03738317757009349, -0.03844660194174765, 0.03998384491114709,

-0.07961165048543696, -0.05358649789029531, -0.11268390548372711, 0.06293179248838075, -0.08083195462065695,

0.06775520699408584, -0.04852498494882601, 0.0803594026828651, 0.050251844910389996, -0.06212357796118668,

0.05315733143061015, 0.014227642276422701, -0.03139612558450234, -0.03908045977011498, 0.10944976076555027,

-0.022318059299191312, 0.06517423908248779, -0.020706077233668102, -0.03795327201606928, -0.05505494505494509,

0.02418885916967094, 0.04053593732258425, -0.09297250109122647, 0.004571703561116355, -0.041916167664670566,

-0.057999999999999996, -0.08691613588110403, 0.06612410986775176, 0.09651035986913846, 0.019641969169567425,

0.03950256035113385, -0.03635937133474083, 0.022882181110029258, -0.02427415516420762, -0.005975609756097445,

-0.026009078640657584, -0.0364025695931478, -0.006535947712418306, -0.02605263157894742, 0.05120237773574716,

0.04125433748875468, -0.03344853122685758, -0.038436981228451045, 0.015139442231075651, -0.02145473574044999,

0.04933155080213909, -0.0002548095298765308, -0.007391359755320398, -0.016690204134035193, -0.013578796187491938,

0.08060886829913974, 0.03160215580597738, -0.03763951555450006, 0.13510178901912404, 0.03369565217391295,

0.16719242902208217, 0.1531531531531531, -0.039062500000000035, -0.016260162601626032, -0.008264462809917363,

0.016666666666666684, 0.2295081967213115, -0.02666666666666669, -0.034246575342465786, -0.06382978723404245,

-0.06818181818181823, -0.08130081300813015, -0.008849557522123706, 0.07142857142857129, 0.19166666666666665,

0.055944055944056, 0.006622516556291397, -0.019736842105263174, 0.14093959731543623, -0.08823529411764701,

-0.09032258064516137, -0.07801418439716304, 0.007692307692307699, 0.030534351145038195, 0.09629629629629621,

0.17567567567567569, 0.5804597701149425, -0.19999999999999993, 0.09090909090909079, 0.2708333333333333,

-0.009836065573770428, 0.03973509933774838, -0.009554140127388614, 0.022508038585209094, 0.37735849056603765,

0.02054794520547942, 0.45190156599552583, 0.13713405238828963, -0.1910569105691057, 0.19932998324958132,

0.1773743016759776, 0.32028469750889693, -0.039532794249775495, -0.3012160898035547, 0.25435073627844706,

0.16969050160085397, 0.0355839416058393, 0.360352422907489, 0.1062176165803108, -0.00468384074941442,

-0.1023529411764706, 0.3106159895150721, -0.049, 0.025762355415352364, -0.057406458226550536,

-0.4540511147362698, -0.11055776892430275, -0.322508398656215, 0.4793388429752065, 0.22234636871508384,

0.027422303473491838, -0.12544483985765126, 0.422177009155646, -0.005007153075822624, -0.031631919482386736,

-0.020044543429844196, -0.05681818181818182, 0.05060240963855428, -0.03211009174311926, -0.028436018957345925,

0.07073170731707311, 0.022779043280182286, 0.01855976243504083, 0.00801749271137022, -0.039045553145336295,

-0.033107599699021786, -0.038910505836575876, -0.1076923076923077, 0.06624319419237754, 0.0425531914893617,

-0.035918367346938734, -0.10922946655376807, 0.04847908745247147, 0.039891205802357325, -0.06277244986922412,

-0.05860465116279077, -0.10770750988142291, 0.027685492801771874, -0.13469827586206898, 0.0535491905354921,

-0.08983451536643033, 0.020779220779220797, 0.040712468193384144, 0.04034229828850857, 0.09870740305522913,

-0.15401069518716573, 0.01769911504424786, 0.11180124223602465, -0.02234636871508372, 0.28228571428571436,

-0.0053475935828877445, -0.0806451612903226, -0.0346003898635478, -0.09237758707723365, 0.008898776418242499,

0.07828004410143319, 0.004089979550102344, -0.06517311608961308, -0.07734204793028313, -0.037780401416765086,

-0.028220858895705574, -0.01388888888888893, 0.08706786171574912, -0.04004711425206123, -0.0797546012269939,

0.11066666666666668, -0.07322929171668671, 0.21632124352331616, 0.00319488817891367, -0.033970276008492596,

-0.026373626373626398, -0.027088036117381517, -0.04292343387470989, 0.008484848484848519, -0.025240384615384717,

0.05672009864364992, 0.06301050175029162, 0.020856201975850856, 0.007526881720429947, -0.01600853788687285,

-0.03904555314533635, 0.0011286681715575381, 0.030439684329199704, 0.16301969365426697, -0.08795860771401698,

0.009283135636926235, -0.05467552376085847, 0.11459459459459465, 0.026188166828321976, 0.0916824196597354,

-0.14588744588744593, 0.1799290420679169, -0.008591065292096342, 0.026863084922010443, 0.016877637130801777,

-0.010788381742738653, -0.08053691275167778, 0.2645985401459853, 0.011544011544011554, 0.012125534950071322,

-0.0021141649048625343, -0.07132768361581919, -0.07908745247148295, 0.012386457473162705, -0.017944535073409516,

-0.018272425249169343, -0.04241962774957701

],

"significance_level": 0.05

}

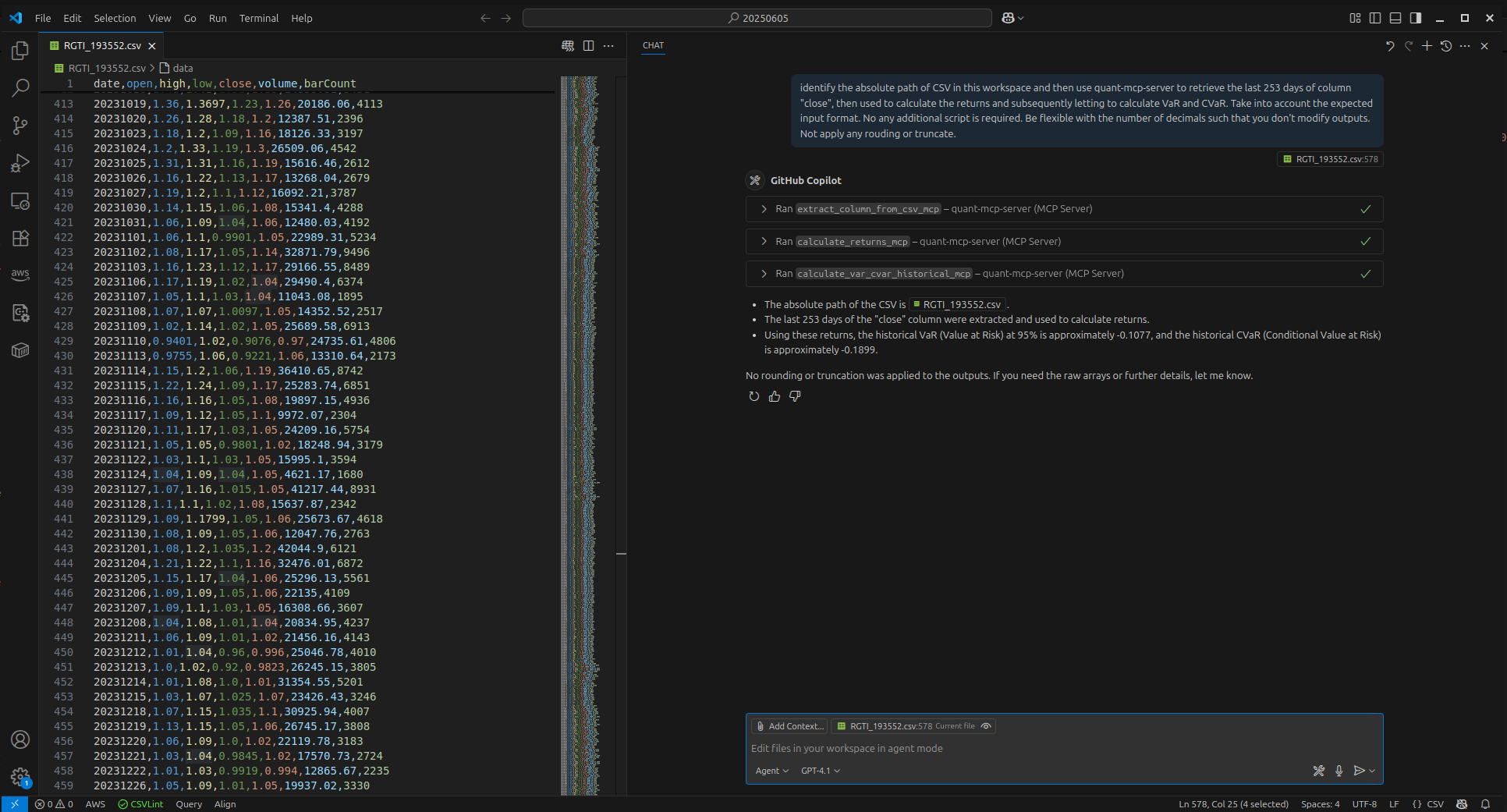

Results

As result the VaR is express by GPT 4.1. I should confess that in this ocassion I expected to see the VaR in percentage format, but LLM model did not interpret it in that way. Instead, the decimal format was shown, such that the HVaR calculated given the functions above, is about -10.77% and HCVaR is about -18.9%. Result is shown below.

Moreover, a checking was performed by running the functions in a jupyter notebook calling the same functions in the same order. Result is shown in my Github repositoy, here. As expected the result is the same as displayed in Github Copilot chat.

Takeaways

The process of creating tools is fairly straightforward. However, designing libraries that expose functions, classes and modules via an MCP server appears to offer advantages. There is no doubt about the potential usefulness of exposing existing modules via LLM models using MCP servers. The challenge therefore lies in structuring an effective prompt that enables the agent to develop a robust plan for executing tools in the expected order. Prompt engineering is therefore essential, particularly when a large number of tools are deployed.

Similarly, strategically designing tools helps to avoid confusing the agent. Tools with similar names or descriptions may produce undesirable results.